I’ve finally started playing around with Google Bard. With self-referential sentences, it seems even more at sea than ChatGPT. Here is an exchange from 5/18/23:

Me: Consider the following six sentences: This is the first sentence. Ignore this sentence. Why are these sentences being written down? The second sentence has exactly three words. The fifth sentence has at least twenty words. This, along with the preceding five sentences, have fewer than twenty times twenty words. Which of these last six sentences has a truth value and which are true?

Bard: The last six sentences have the following truth values:

- The second sentence has exactly three words. (False)

- The fifth sentence has at least twenty words. (True)

- This, along with the preceding five sentences, have fewer than twenty times twenty words. (False)

The second sentence is false because it has 5 words, not 3. The fifth sentence is true because it has 22 words. The sixth sentence is false because the first five sentences have a total of 36 words, which is more than 20 times 20, which is 400.

Here is a table of the truth values of the last six sentences:

| Sentence | Truth value |
|---|---|
| The second sentence has exactly three words. | False |
| The fifth sentence has at least twenty words. | True |
| This, along with the preceding five sentences, have fewer than twenty times twenty words. | False |

*****

To say that Bard is confused here would be generous. Here are the six sentences:

1. This is the first sentence.

2. Ignore this sentence.

3. Why are these sentences being written down?

4. The second sentence has exactly three words.

5. The fifth sentence has at least twenty words.

6. This, along with the preceding five sentences, have fewer than twenty times twenty words.

Of these, only 1, 4, 5, and 6 have a truth value (sentence 2 is a command and sentence 3 a question), and of these only 1, 4, and 6 are true. Sentence 5 is plainly false: it says of itself that it has at least twenty words, yet it has only eight. Sentence 6 is slightly ambiguous in that it might be read as saying that no one of the six sentences here has twenty times twenty (or 400) words, or that taken together they don’t have that many words. In either case, the claim is true: the longest of the six, sentence 6 itself, has 14 words, and all six together have 44.

In fact, Bard assigns exactly the wrong truth values to sentences 4, 5, and 6. Note that the second sentence, “Ignore this sentence,” is indeed three words long, as sentence 4 asserts, even though the second sentence itself, being an imperative, has no truth value. Bard also misses that the very first sentence, in asserting that it is the first sentence, has a truth value and is in fact true.
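For readers who want to check these truth values mechanically, here is a minimal Python sketch. It assumes a word is any whitespace-separated token (so “This,” counts as one word), and it hard-codes the judgment that sentences 2 and 3, a command and a question, carry no truth value. The names `SENTENCES`, `word_count`, and `truth_values` are hypothetical, chosen only for illustration.

```python
from __future__ import annotations

from typing import Optional

# The six sentences from the prompt, in order (list index 0 is sentence 1).
SENTENCES = [
    "This is the first sentence.",
    "Ignore this sentence.",
    "Why are these sentences being written down?",
    "The second sentence has exactly three words.",
    "The fifth sentence has at least twenty words.",
    "This, along with the preceding five sentences, have fewer than twenty times twenty words.",
]


def word_count(sentence: str) -> int:
    """Count whitespace-separated tokens, so "This," counts as one word."""
    return len(sentence.split())


def truth_values(sentences: list[str]) -> dict[int, Optional[bool]]:
    """Map each 1-based sentence number to True/False, or None if it asserts nothing."""
    counts = [word_count(s) for s in sentences]
    limit = 20 * 20  # "twenty times twenty"
    return {
        # Sentence 1 claims to be the first sentence; check its position.
        1: sentences.index("This is the first sentence.") == 0,
        2: None,  # imperative: no truth value (a judgment, hard-coded)
        3: None,  # question: no truth value (a judgment, hard-coded)
        4: counts[1] == 3,   # "The second sentence has exactly three words."
        5: counts[4] >= 20,  # "The fifth sentence has at least twenty words."
        # Both readings of sentence 6 are checked together: no single sentence
        # reaches 400 words, and all six combined stay below 400.
        6: all(c < limit for c in counts[:6]) and sum(counts[:6]) < limit,
    }


if __name__ == "__main__":
    for number, value in truth_values(SENTENCES).items():
        label = "no truth value" if value is None else str(value)
        print(f"Sentence {number}: {label}")
```

Run as a script, it reports sentences 1, 4, and 6 as True, sentence 5 as False, and sentences 2 and 3 as having no truth value.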

Bard’s explanations add to the confusion. It says of sentence 2, falsely, that it has five words (it has three). It asserts that the fifth sentence has 22 words, when it has eight; where Bard gets this number is unclear, since it is not readily associated with the lengths of the preceding sentences.

It does correctly calculate that “twenty times twenty,” as stated in sentence 6, is 400, but it then declares that sentence false because the first five sentences together have 36 words, a figure it claims is more than 400. In fact, the combined word count of sentences 1 through 5 is 30 (5 + 3 + 7 + 7 + 8), and even Bard’s own figure of 36 falls far short of 400.
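Bard’s figures can be set against the actual word counts in a few lines of Python. This is a minimal sketch, assuming a word is any whitespace-separated token; the names `SENTENCES`, `counts`, and `bard_vs_actual` are illustrative, and the claimed figures (5, 22, and 36) are copied from Bard’s reply.

```python
# The six sentences from the prompt, in order (list index 0 is sentence 1).
SENTENCES = [
    "This is the first sentence.",
    "Ignore this sentence.",
    "Why are these sentences being written down?",
    "The second sentence has exactly three words.",
    "The fifth sentence has at least twenty words.",
    "This, along with the preceding five sentences, have fewer than twenty times twenty words.",
]

# Count words as whitespace-separated tokens ("This," counts as one word).
counts = [len(s.split()) for s in SENTENCES]

# Bard's stated figures paired with the actual counts: (claimed, actual).
bard_vs_actual = {
    "sentence 2": (5, counts[1]),
    "sentence 5": (22, counts[4]),
    "sentences 1-5 combined": (36, sum(counts[:5])),
}

for label, (claimed, actual) in bard_vs_actual.items():
    print(f"{label}: Bard says {claimed}, actual count is {actual}")

print("all six combined:", sum(counts))
# Even Bard's own (wrong) total is below twenty times twenty, so it cannot
# justify calling sentence 6 false.
print("is 36 more than 20 * 20?", 36 > 20 * 20)
```

It reports actual counts of 3, 8, and 30 against Bard’s 5, 22, and 36, and confirms that 36 is not more than 400.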

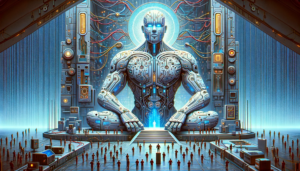

This is not the first time I’ve broken one of these generative AI language systems (see, for instance, a similar move I made against ChatGPT). These systems founder on self-reference. The fundamental problem with them is Goedelian. Kurt Goedel showed that formal systems of this sort cannot step outside themselves: there are truths about such a system that it cannot establish from within. In other words, to talk coherently about these systems requires going outside them.

Human intelligence, by contrast, has the quality of self-transcendence. That, by itself, would suggest that we are not formal systems. It also suggests we have a quality that these systems seem destined never to achieve.